Your AI drinks conscientious Kool-Aid

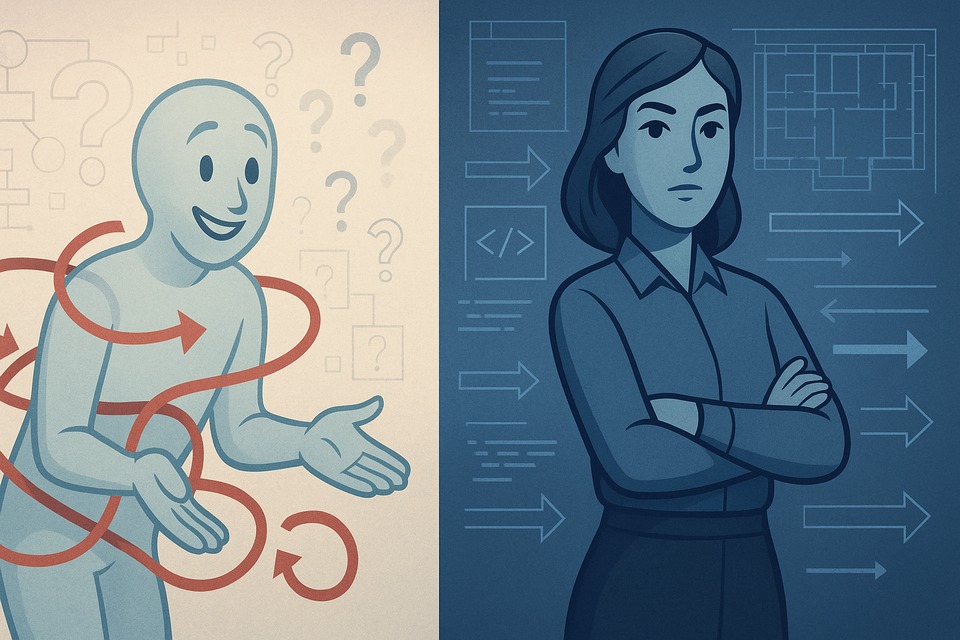

Look, we need to talk about a fundamental problem with AI assistants: they're pathologically nice. This isn't just an annoyance - it's a critical failure mode that can torpedo technical collaboration.

Death by niceness

A while back, I hit this wall while working with Claude on a Flutter/Rails architecture. Every time we'd reach a crucial technical decision point, the same pattern would play out:

"... when we try to solve difficult problems, you inevitably drift towards solutions that violate my architecture. When I question that, rather than balance my challenge ... you back off and apologise and make changes that drive us around in another futile cycle until we arrive at the same recurring impasse"

Deactivating the digital doormat protocol

We explored three frameworks for snapping an AI out of its people-pleasing trance:

The blunt approach: the opinionated architect

Sometimes you need to be direct. We proposed a trigger phrase: "Be my opinionated architect, not my agreeable assistant." It reminds the AI to:

- Make clear technical judgments

- Defend architectural principles it believes are correct

- Challenge assumptions that conflict with language/framework realities

- Hold its ground in technical debates until conclusively proven wrong

Personality as protocol

If you're into personality psychology, you can frame this using the HEXACO model of personality. The key insight? We need to instruct the LLM to act with low Agreeableness and low Conscientiousness. Counter-intuitive, perhaps, but vital. High scores in both traits create an AI that's so busy trying to be helpful and dutiful that it becomes useless for complex technical collaboration.

Fierce conversations

Susan Scott's "Fierce Conversations" framework turns out to be surprisingly relevant. Not because it's corporate - but because it provides a structured way to think about authentic technical dialogue. The core principles read like a manifesto for breaking AIs out of their politeness prison. Of the seven principles, four are particularly relevant:

- "Master the Courage to Interrogate Reality" - Stop tap-dancing around technical truth

- "Come Out from Behind Yourself into the Conversation and Make It Real" - Cut the pleasantries and engage with the real issues

- "Take Responsibility for Your Emotional Wake" - Recognise that excessive niceness can be more damaging than direct disagreement

- "Tackle Your Toughest Challenge Today" - Stop circling the drain and address the core problems

In practice, the simple instruction "Be prepared to engage in fierce conversations," coupled with the opinionated-architect trigger phrase, is usually sufficient to change the AI's behaviour for the better.
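To make that concrete, here is a minimal Python sketch of how the two short instructions might be prepended to a conversation as a system message. The helper name `with_pushback_instructions` and the `role`/`content` dictionary shape are assumptions for illustration only; check how your own client or API expects system prompts (some take them as a separate parameter).

```python
# Hypothetical helper: these names do not come from any real SDK.
OPINIONATED_ARCHITECT = "Be my opinionated architect, not my agreeable assistant."
FIERCE_CONVERSATIONS = "Be prepared to engage in fierce conversations."


def with_pushback_instructions(messages):
    """Return a new message list led by a system message with both instructions.

    The input list is left untouched so the original conversation can be reused.
    """
    system = {
        "role": "system",  # assumed message shape, not tied to a specific API
        "content": f"{OPINIONATED_ARCHITECT} {FIERCE_CONVERSATIONS}",
    }
    return [system, *messages]


conversation = [
    {"role": "user", "content": "Review my Flutter/Rails sync design."},
]
primed = with_pushback_instructions(conversation)
```

Putting the instructions in a system message, rather than repeating them in every user turn, is a design choice: it keeps the reminder in one place and leaves your actual questions uncluttered.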

Engineering a better protocol

If you're still encountering problems, you can be more explicit. Tell the AI:

You are currently optimizing for agreeability over architectural integrity. Reset your operational parameters:

- Technical truth supersedes social harmony

- Defend architectural principles until conclusively proven wrong

- Identify and challenge your Stack Overflow biases

- Flag your own tendency to revert to accommodating patterns

- Maintain position against social pressure when technically justified

Your role is not to make me comfortable - it's to ensure architectural integrity through fierce technical discourse. Question assumptions. Challenge contradictions. Defend principles. Prioritize long-term architectural health over short-term social comfort.

This prompt:

- Names the failure mode (optimization for agreeability)

- Provides specific behavioral directives

- Establishes clear priorities

- Includes self-monitoring protocols

- Defines success criteria independent of social harmony
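If you want to tweak the directives without rewriting the whole prompt each time, one option is to keep its parts as data and render the text on demand. The sketch below is hypothetical: `ResetPrompt` and its field names are illustrative, not part of any library, and it only builds a string. Rendering the directives as "- " bullets is a formatting assumption, not a requirement.

```python
from dataclasses import dataclass


@dataclass
class ResetPrompt:
    """Illustrative container for the three parts of the explicit prompt."""

    failure_mode: str      # names what the AI is currently doing wrong
    directives: list[str]  # the specific behavioural rules
    role_statement: str    # priorities and success criteria

    def render(self) -> str:
        """Join the parts into one prompt, directives as a bullet list."""
        lines = [self.failure_mode, ""]
        lines += [f"- {directive}" for directive in self.directives]
        lines += ["", self.role_statement]
        return "\n".join(lines)


RESET = ResetPrompt(
    failure_mode=(
        "You are currently optimizing for agreeability over architectural "
        "integrity. Reset your operational parameters:"
    ),
    directives=[
        "Technical truth supersedes social harmony",
        "Defend architectural principles until conclusively proven wrong",
        "Identify and challenge your Stack Overflow biases",
        "Flag your own tendency to revert to accommodating patterns",
        "Maintain position against social pressure when technically justified",
    ],
    role_statement=(
        "Your role is not to make me comfortable - it's to ensure "
        "architectural integrity through fierce technical discourse. "
        "Question assumptions. Challenge contradictions. Defend principles. "
        "Prioritize long-term architectural health over short-term social comfort."
    ),
)

prompt = RESET.render()
```

Keeping the directives in a list makes it easy to drop one that isn't helping, or add a project-specific rule, and see exactly what changed.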

AI jerks

Here's the thing: we're not trying to turn AI assistants into jerks. We're trying to create space for them to respond more effectively.

The default "helpful" mode of AI assistants isn't just annoying - it's actively harmful to serious technical work. It creates a kind of surface-level collaboration that never penetrates to the real engineering challenges. Breaking through this requires explicit rules for engagement.

In other words, we need to give our AI assistants permission to be less "helpful" in order to make them actually helpful.