Why your AI implementation still feels like Groundhog Day

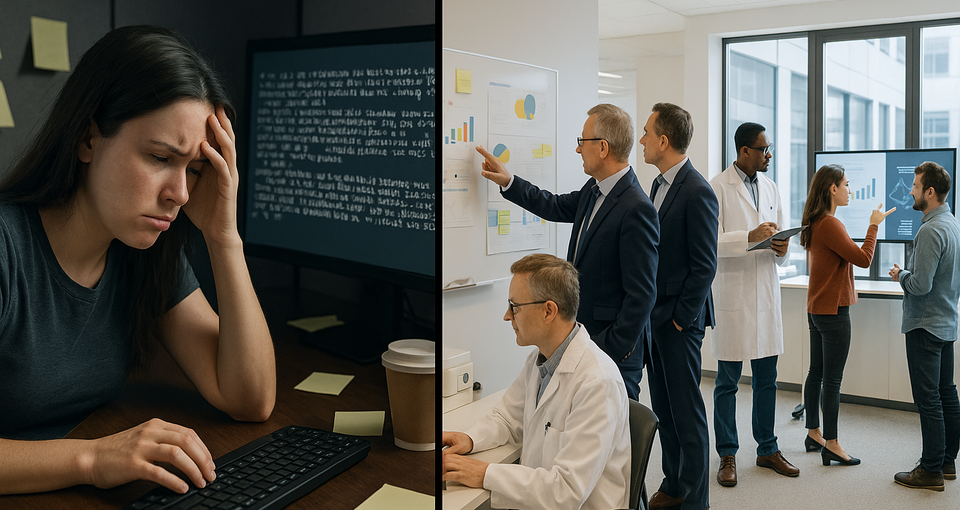

Every morning, your organisation's AI champion (maybe that's you) logs in with fresh optimism. They run their successful prompt from yesterday. And they regard the result with stomach-leadening horror. The context has drifted. The output feels generic. So they (you) tweak, adjust, and eventually recreate something that worked perfectly just 24 hours ago.

Sound familiar?

This isn't a technical problem; it's an organisational learning problem disguised as one. And it perfectly illustrates why Ethan Mollick's recent article, "Making AI Work: Leadership, Lab, and Crowd", lands so accurately. The gap between individual AI wins and organisational transformation isn't about better models or smarter prompts. It's about building what Peter Senge, in The Fifth Discipline, called a "learning organisation".

Now we need to build on that idea to create learning organisations for the AI age.

The framework that explains everything

Mollick observes that AI use that boosts individual performance does not naturally translate to improving organisational performance. Despite workers reporting 3x productivity gains from AI use, companies are seeing minimal organisational impact. The problem? Most organisations have outsourced innovation to consultants for so long that their internal innovation muscles have atrophied (the systems archetype for this is called "Shifting the Burden").

The solution lies in three keys to AI transformation:

- Leadership: Creating vision, incentives, and psychological safety for AI experimentation

- Lab: Systematic experimentation, benchmarking, and rapid prototyping

- Crowd: Employee-driven discovery and knowledge sharing

This framework essentially applies Senge's Fifth Discipline principles to AI transformation. It bakes organisational learning into the culture so that learning keeps pace with the technology, by building a shared vision and mental models that promote team learning and encourage personal mastery.

The three keys

While I'd encourage you to read Mollick's full article, here's a quick summary of the three keys.

Leadership: Most leaders paint abstract visions ("AI will transform our business!") without the vivid, specific pictures that actually motivate change. Workers need to know: Will efficiency gains mean layoffs or growth? How will AI use be rewarded? What does success actually look like day-to-day?

Lab: Organisations lack systematic approaches to capture what works, benchmark AI performance for their specific use cases, and distribute successful discoveries. They're stuck in analysis paralysis or random tool syndrome—either endless strategy meetings or throwing AI tools at problems without coherent learning.

Crowd: Employees, whom Mollick nicknames "Secret Cyborgs", are hiding their AI use because incentives reward secrecy over sharing. Meanwhile, others remain paralysed by vague ethics policies or fear of appearing incompetent.

Where knowledge management becomes strategic advantage

Here's where the framework becomes actionable: The organisations winning at AI are those solving the knowledge capture and distribution challenge. They're turning individual AI discoveries into organisational capabilities.

For Leadership: Instead of abstract AI promises, leaders can demonstrate specific, quickly built AI-powered workflows, showing rather than telling what the future looks like. They can model AI use in real time during meetings, creating those "vivid pictures" Mollick emphasizes.

For the Lab: Rapid prototyping becomes systematic when successful prompts, personas, and workflows can be captured, tested across different models, and instantly distributed. That context drift problem? You manage it when your Lab builds organisational benchmarks and maintains prompt libraries that evolve with your understanding.

For the Crowd: Secret Cyborgs become Sanctioned Innovators when they have professional platforms to experiment, document successes, and share discoveries without fear. The scattered individual wins become organisational learning.

A case study in context drift

Consider a client struggling with LLM context drift and hallucination. Seen through Mollick's framework, this isn't a technical problem; it's all three keys failing simultaneously:

- Leadership hasn't created clear guidelines for what "good enough" AI output looks like for their specific use cases

- Lab isn't systematically testing and benchmarking what works across different models and contexts

- Crowd isn't capturing and sharing the successful prompts and approaches individuals discover

The solution is more than better prompts—it's building systematic knowledge management that captures what works, when it works, and why it works. When the next model update arrives, you're not starting from scratch—you're building on documented organisational learning.
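
To make "what works, when it works, and why it works" concrete, here is a minimal Python sketch of what one entry in a shared prompt library might record. Everything in it is a hypothetical illustration (the `PromptRecord` and `PromptLibrary` names, the fields, the 30-day staleness threshold); none of it comes from Mollick's article or from any particular tool.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class PromptRecord:
    """One documented discovery: what worked, when it worked, and why."""
    task: str           # the business task the prompt serves
    prompt: str         # what worked
    model: str          # the model it was verified on
    verified_on: date   # when it was last confirmed to work
    why_it_works: str   # the reasoning, so colleagues can adapt it
    author: str = "unknown"


@dataclass
class PromptLibrary:
    """A shared store, so discoveries outlive one person's memory."""
    records: List[PromptRecord] = field(default_factory=list)

    def add(self, record: PromptRecord) -> None:
        self.records.append(record)

    def latest_for(self, task: str) -> Optional[PromptRecord]:
        """Return the most recently verified record for a task, if any."""
        matches = [r for r in self.records if r.task == task]
        return max(matches, key=lambda r: r.verified_on, default=None)

    def stale(self, today: date, max_age_days: int = 30) -> List[PromptRecord]:
        """Records not re-verified recently: candidates to retest after a model update."""
        return [r for r in self.records
                if (today - r.verified_on).days > max_age_days]
```

The `why_it_works` field matters as much as the prompt itself: when a model update breaks the prompt, the documented reasoning is what lets someone else rebuild it rather than start from scratch.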

From random tool syndrome to strategic advantage

The companies that will win the AI transformation aren't necessarily those with the biggest AI budgets or the most sophisticated technical teams. They're the ones building the fastest learning loops between Leadership, Lab, and Crowd.

This means:

- Capturing successful AI interactions systematically, not hoping individuals remember what worked

- Testing AI performance against your actual business tasks, not generic benchmarks

- Distributing discoveries immediately across the organisation, not waiting for training programs

- Iterating based on real performance data, not theoretical possibilities
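
As a rough illustration of testing against your own business tasks rather than generic benchmarks, the sketch below scores each model by how many of your tasks it passes. It is an assumption-laden example, not a recommended tool: `run_benchmark`, the two sample tasks, and the stub "models" are all hypothetical, and a real Lab would replace the stubs with calls to whichever AI services it actually uses.

```python
from typing import Callable, Dict, List

# A "model" here is any function that maps a prompt to text (an assumption;
# in practice it would wrap a call to a real AI service).
Model = Callable[[str], str]


def run_benchmark(models: Dict[str, Model], tasks: List[dict]) -> Dict[str, float]:
    """Return each model's pass rate on your own business tasks."""
    if not tasks:
        raise ValueError("at least one task is required")
    scores = {}
    for name, model in models.items():
        passed = sum(1 for t in tasks if t["check"](model(t["prompt"])))
        scores[name] = passed / len(tasks)
    return scores


# Hypothetical tasks: each pairs a prompt with a check for "good enough".
tasks = [
    {"prompt": "Summarise this customer complaint in one sentence.",
     "check": lambda out: len(out.split()) <= 30},
    {"prompt": "Draft a reply that mentions our refund policy.",
     "check": lambda out: "refund" in out.lower()},
]

# Stand-in models so the sketch runs without any external service.
models = {
    "stub-terse": lambda prompt: "We will process your refund.",
    "stub-verbose": lambda prompt: "word " * 50,
}

scores = run_benchmark(models, tasks)
```

The `check` functions are where Leadership's definition of "good enough" becomes testable. Rerunning the same tasks after each model update turns "the output feels generic" into a number you can track.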

As Mollick notes, "The time to begin isn't when everything becomes clear—it's now, while everything is still messy and uncertain. The advantage goes to those willing to learn fastest."

The question isn't whether your organisation will transform with AI. It's whether you'll build the learning systems to do it systematically, or keep reliving the same context drift frustrations every morning.

Ethan Mollick's "Making AI Work: Leadership, Lab, and Crowd" is published in One Useful Thing.